Running an A/B test without tracking the right metrics is like baking a cake without checking the oven temperature—you might get lucky, but most of the time, the results won’t turn out as expected.

A/B testing is a powerful way to optimize your website, but choosing the wrong metrics can lead to misleading conclusions. A test might show a higher click-through rate, but if those clicks don’t lead to actual conversions, was it really a success?

This guide will help you understand which metrics matter most for A/B testing, how to track them, and when to ignore vanity numbers that don’t actually help your business.

Why Choosing the Right Metrics Matters

Not all metrics tell the full story. If you only focus on one number, you might miss the bigger picture.

For example:

- A button colour change increases clicks, but if users don’t complete the checkout, was it really an improvement?

- A new homepage layout reduces bounce rate, but if sales don’t increase, does it matter?

Choosing the right A/B testing metrics ensures that your decisions are based on real improvements, not just surface-level changes.

Key Metrics to Track in A/B Testing

The right metrics depend on what you’re testing. Here’s a breakdown of the most important ones and when to use them.

1. Conversion Rate (The Gold Standard)

What it is: The percentage of visitors who complete a desired action (purchase, sign-up, form submission, etc.).

When to track it: Always. Conversion rate is the ultimate measure of whether an A/B test actually improves business performance.

Example:

- Testing a new checkout button? Track the purchase conversion rate.

- Testing a sign-up form? Track the email sign-up conversion rate.
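As a rough illustration of the definition above, here is a minimal sketch that computes conversion rate for two variants and the relative lift between them. The function name and the visitor and conversion counts are made up for the example, not taken from any real test.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Return conversions as a percentage of visitors (0.0 if there were no visitors)."""
    if visitors == 0:
        return 0.0
    return 100.0 * conversions / visitors


# Hypothetical counts for the control (A) and the variant (B).
rate_a = conversion_rate(conversions=120, visitors=4000)
rate_b = conversion_rate(conversions=150, visitors=4000)

# Relative lift: how much B improved over A, as a percentage of A's rate.
relative_lift = 100.0 * (rate_b - rate_a) / rate_a

print(f"A: {rate_a:.2f}%  B: {rate_b:.2f}%  relative lift: {relative_lift:.1f}%")
```

Reporting the relative lift next to the two raw rates makes it harder to mistake a change in percentage points for a percentage change.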

2. Click-Through Rate (CTR)

What it is: The percentage of users who click on a specific element, like a CTA button or an ad.

When to track it: When testing elements like CTA buttons, headlines, or product recommendations to see if they encourage engagement.

Example:

- Testing different CTA button text? Check if the new version gets more clicks.

- Testing a new homepage layout? See if more people click through to product pages.

Warning: A higher CTR doesn’t always mean success—if users click but don’t convert, something else might be the issue.
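The warning above can be checked with a few lines of code. This is a sketch under assumptions: the `VariantStats` class and all of its numbers are hypothetical, and conversions are measured against impressions rather than clicks.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    impressions: int  # times the element was shown
    clicks: int       # clicks on the element
    conversions: int  # completed purchases or sign-ups

    @property
    def ctr(self) -> float:
        """Clicks as a fraction of impressions."""
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def conversion_rate(self) -> float:
        """Conversions as a fraction of impressions."""
        return self.conversions / self.impressions if self.impressions else 0.0


# Hypothetical results: the variant gets more clicks but fewer conversions.
control = VariantStats(impressions=5000, clicks=400, conversions=100)
variant = VariantStats(impressions=5000, clicks=550, conversions=90)

ctr_improved = variant.ctr > control.ctr
conversions_improved = variant.conversion_rate > control.conversion_rate

if ctr_improved and not conversions_improved:
    print("CTR went up but conversions did not: look further down the funnel.")
```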

3. Bounce Rate

What it is: The percentage of visitors who leave after viewing just one page.

When to track it: When testing homepage design, landing pages, or navigation to see if changes keep users engaged.

Example:

- A new homepage layout reduced the bounce rate by 10%—but did those visitors buy anything?

- A shorter blog post led more visitors to leave after a single page, but did the ones who stayed engage more elsewhere on the site?

Warning: A lower bounce rate is good, but only if those users take meaningful actions afterwards.
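For reference, bounce rate can be computed from per-session page counts. This is a minimal sketch that assumes you can export how many pages each session viewed; the sample data is invented.

```python
def bounce_rate(pages_per_session: list[int]) -> float:
    """Return the percentage of sessions that viewed exactly one page."""
    if not pages_per_session:
        return 0.0
    bounces = sum(1 for pages in pages_per_session if pages == 1)
    return 100.0 * bounces / len(pages_per_session)


# Hypothetical sessions: each number is the pages viewed in one visit.
sessions = [1, 3, 1, 2, 5, 1, 1, 4]
rate = bounce_rate(sessions)
print(f"Bounce rate: {rate:.1f}%")
```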

4. Time on Page (Engagement Indicator)

What it is: The average time visitors spend on a page before leaving.

When to track it: When testing content-heavy pages like blogs, product descriptions, or landing pages.

Example:

- A new product page layout increased time on page—but did it also increase conversions?

- A longer article kept people reading longer, but did they sign up or share it?

Warning: More time on page isn’t always good—sometimes it means users are confused and can’t find what they need.

5. Cart Abandonment Rate

What it is: The percentage of users who add items to their cart but don’t complete the purchase.

When to track it: When testing checkout flow, pricing, or trust signals (like free shipping badges).

Example:

- A one-page checkout reduced cart abandonment by 15%.

- Adding a money-back guarantee to the checkout page led to more completed purchases.
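The calculation behind this metric is simple enough to sketch. The function name and the cart and purchase counts below are illustrative assumptions only.

```python
def cart_abandonment_rate(carts_created: int, purchases: int) -> float:
    """Return the percentage of carts that did not end in a purchase."""
    if carts_created == 0:
        return 0.0
    return 100.0 * (carts_created - purchases) / carts_created


# Hypothetical counts for the existing checkout and a one-page checkout.
old_checkout = cart_abandonment_rate(carts_created=1000, purchases=300)
new_checkout = cart_abandonment_rate(carts_created=1000, purchases=360)

print(f"Old: {old_checkout:.1f}%  New: {new_checkout:.1f}%")
```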

6. Average Order Value (AOV)

What it is: The average amount spent per order.

When to track it: When testing upsells, bundles, or pricing strategies.

Example:

- A discount increased sales, but AOV dropped because each order was worth less.

- Adding a “Frequently Bought Together” section increased AOV by 20%.

Warning: More purchases are great, but check that the lower revenue per order doesn’t cancel out the gain in total revenue.
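To see why AOV is best read alongside total revenue, here is a minimal sketch that computes both AOV and revenue per visitor. Revenue per visitor is added here as a cross-check and is not one of the metrics listed in this guide; all order values and visitor counts are hypothetical.

```python
def average_order_value(order_values: list[float]) -> float:
    """Return total revenue divided by the number of orders."""
    if not order_values:
        return 0.0
    return sum(order_values) / len(order_values)


def revenue_per_visitor(order_values: list[float], visitors: int) -> float:
    """Return total revenue divided by the number of visitors."""
    if visitors == 0:
        return 0.0
    return sum(order_values) / visitors


# Hypothetical data: the discount variant has more orders, each worth less.
control_orders = [50.0] * 40
discount_orders = [40.0] * 60
visitors_per_variant = 1000

control_aov = average_order_value(control_orders)
discount_aov = average_order_value(discount_orders)
control_rpv = revenue_per_visitor(control_orders, visitors_per_variant)
discount_rpv = revenue_per_visitor(discount_orders, visitors_per_variant)

print(f"AOV: {control_aov:.2f} -> {discount_aov:.2f}")
print(f"Revenue per visitor: {control_rpv:.2f} -> {discount_rpv:.2f}")
```

In this made-up case AOV falls while revenue per visitor rises, so a drop in AOV alone would not settle whether the discount helped.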

7. Scroll Depth

What it is: How far down a page visitors scroll.

When to track it: When testing content-heavy pages, landing pages, or long product descriptions.

Example:

- A new video at the top of the page made fewer people scroll—but those who watched it converted more.

- A revised blog post got readers to scroll further, but did they sign up for the newsletter?

Metrics You Should Ignore

Not all numbers are useful for A/B testing. Some might make you feel good but don’t drive meaningful decisions.

1. Page Views

More page views don’t mean a better website. Focus on engagement and conversions, not just traffic.

2. Social Shares

Likes and shares look nice, but unless they lead to more sales or sign-ups, they don’t mean much.

3. Open Rates (for Email Testing)

A higher open rate is great, but if people don’t click through or convert, it doesn’t really matter.

How to Choose the Right Metrics for Your A/B Test

Choosing the right metric depends on your goal.

- Testing a CTA button? → Measure CTR and conversion rate.

- Testing a checkout page? → Measure cart abandonment rate and AOV.

- Testing a product page layout? → Measure bounce rate, time on page, and conversions.

- Testing a landing page? → Measure scroll depth, engagement, and form submissions.

Rule of Thumb: If a metric doesn’t help you make a decision, it’s not worth tracking.
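If you run tests regularly, it can help to write the goal-to-metric pairings down before a test starts. The sketch below encodes the list above as a simple lookup; the key names and the `metrics_for` helper are hypothetical and not tied to any particular tool.

```python
# Hypothetical mapping from test type to the metrics listed in this guide.
METRICS_BY_TEST = {
    "cta_button": ["click_through_rate", "conversion_rate"],
    "checkout_page": ["cart_abandonment_rate", "average_order_value"],
    "product_page_layout": ["bounce_rate", "time_on_page", "conversion_rate"],
    "landing_page": ["scroll_depth", "engagement", "form_submissions"],
}


def metrics_for(test_type: str) -> list[str]:
    """Return the metrics to track for a test type, or raise if it is unknown."""
    try:
        return METRICS_BY_TEST[test_type]
    except KeyError:
        raise ValueError(f"No metrics defined for test type: {test_type!r}") from None


print(metrics_for("checkout_page"))
```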

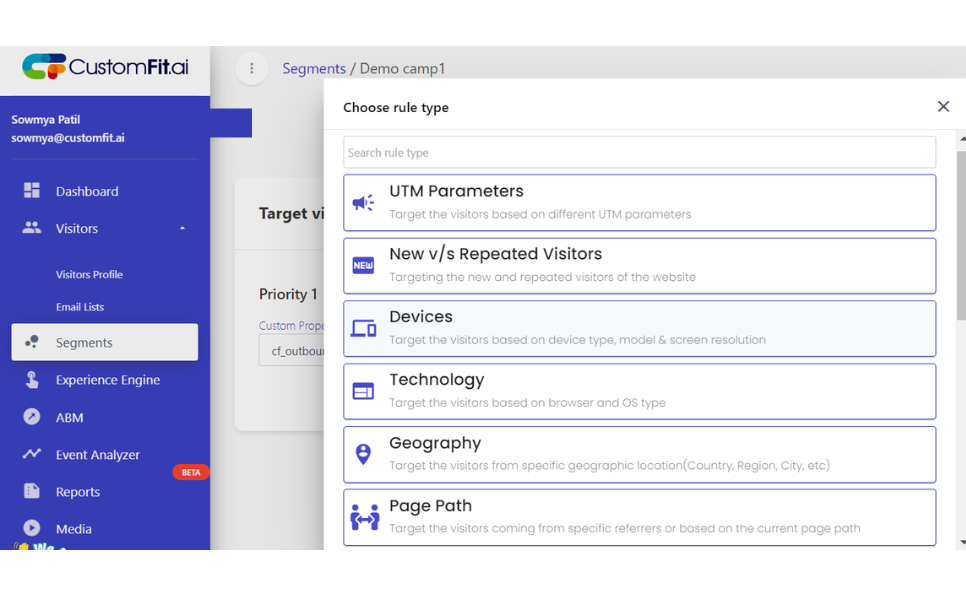

How CustomFit.ai Helps You Track the Right Metrics

A/B testing is only valuable if you can track meaningful results. CustomFit.ai makes this easier by:

- Automatically tracking key metrics like conversions, CTR, and bounce rate.

- Segmenting data based on device type, location, or traffic source.

- Providing clear insights so you know what’s working (and what’s not).

Instead of drowning in data, CustomFit.ai helps you focus on what actually matters.

FAQs: Choosing A/B Testing Metrics

1. What’s the most important metric for A/B testing?

Conversion rate is usually the most important because it directly impacts sales and sign-ups.

2. Can I track multiple metrics in one A/B test?

Yes, but focus on one primary metric to avoid confusion. Supporting metrics help provide context.

3. What if my test improves one metric but hurts another?

Prioritize what’s best for your business. If CTR increases but conversions drop, the new design might be attracting clicks but not the right kind of engagement.

4. How long should I track these metrics before making a decision?

Run your test until it has collected enough traffic to reach statistical significance. That often takes 1-2 weeks, though low-traffic sites may need longer.
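One common way to check significance for conversion rates is a two-proportion z-test. The sketch below is one possible approach, not the method used by any specific tool; the counts are hypothetical and the 0.05 threshold is a conventional assumption.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: 120 of 4000 visitors converted on A, 150 of 4000 on B.
z, p_value = two_proportion_z_test(conv_a=120, n_a=4000, conv_b=150, n_b=4000)
significant = p_value < 0.05  # conventional threshold, assumed here

print(f"z = {z:.2f}, p = {p_value:.3f}, significant: {significant}")
```

With these made-up numbers, a 25% relative lift still falls short of the 0.05 threshold, which is why a test can need more traffic than it first appears.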

5. Can I ignore bounce rate if my conversions go up?

Yes. If sales are increasing, a slightly higher bounce rate isn’t necessarily a problem.

Final Thoughts

A/B testing isn’t about getting more clicks or lower bounce rates—it’s about making real improvements to your business.

Choosing the right metrics ensures that every test gives meaningful insights so you can make better decisions and get real results.

Want an easier way to track A/B testing performance? Try CustomFit.ai and focus on the metrics that matter.